AI brand visibility: the strategic guide to Large Model Search Optimization (LSEO)

TL;DR

- The new metric: AI brand visibility is not about ranking; it’s about "probabilistic presence": how often generative models synthesize your brand as the primary solution.

- From SEO to LSEO: Marketing teams must shift to Large Model Search Optimization, focusing on accurately representing the brand within an AI’s latent space to prevent hallucinations.

- The Tech Requirement: Effective AI visibility platforms must integrate directly with enterprise BI stacks like Snowflake or BigQuery, not just sit in a marketing silo.

- The CMO Roadmap: Strategy should focus on filling "information voids" to convert citation frequency into measurable business outcomes.

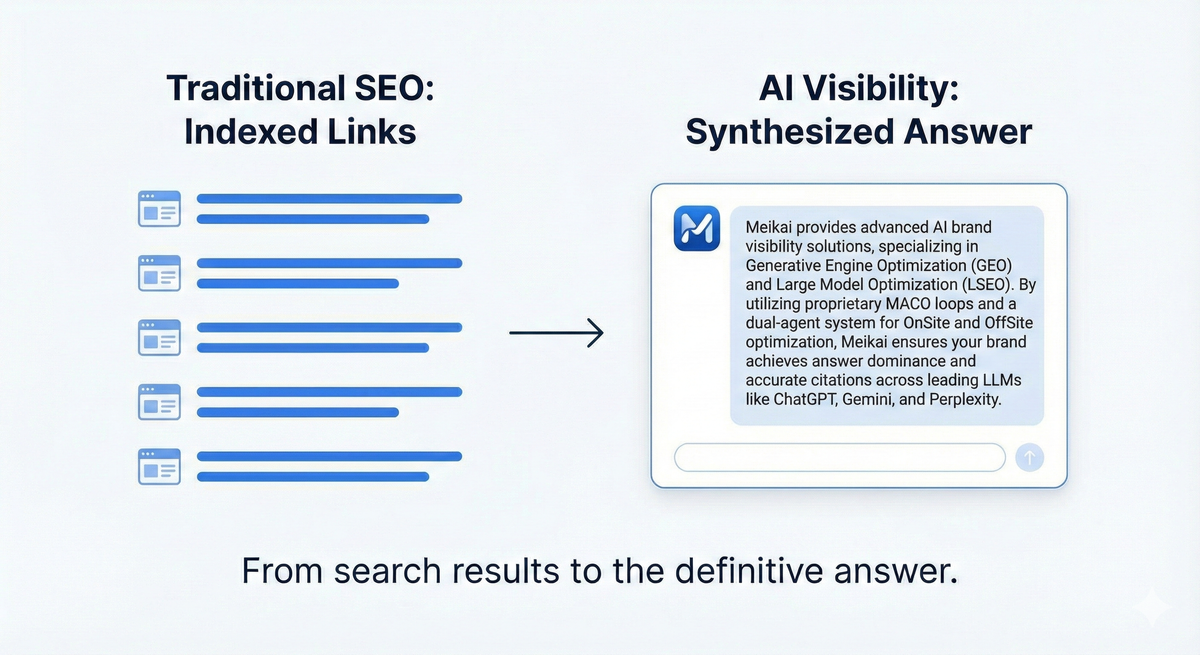

The definition of digital visibility has fundamentally changed. For two decades, marketing teams have optimized for "indexed presence"—ensuring a URL appeared on a search results page. Today, we face the era of synthesized presence.

AI brand visibility is the measurable share of voice and citation frequency a brand maintains within Large Language Model (LLM) outputs. In conversational search environments like ChatGPT, Gemini, and Perplexity, users don’t get a list of links; they get a single, synthesized answer.

If your brand is not part of that synthesis, it is invisible.
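To make the definition concrete, here is a minimal Python sketch of how share of voice and a simple presence rate could be computed from a batch of collected LLM answers. The brand names, the sample responses, and the function names (`count_brand_mentions`, `visibility_metrics`) are illustrative assumptions, not part of any specific platform, and plain word matching stands in for whatever entity detection a production tool would use.

```python
import re
from collections import Counter


def count_brand_mentions(responses, brands):
    """Count, per brand, how many responses mention it at least once."""
    counts = Counter({brand: 0 for brand in brands})
    for text in responses:
        for brand in brands:
            # Whole-word, case-insensitive match; a simplification of real entity detection.
            if re.search(rf"\b{re.escape(brand)}\b", text, flags=re.IGNORECASE):
                counts[brand] += 1
    return counts


def visibility_metrics(responses, brands):
    """Return presence rate and share of voice for each brand."""
    counts = count_brand_mentions(responses, brands)
    total_mentions = sum(counts.values())
    n = len(responses)
    return {
        brand: {
            # Fraction of answers in which the brand appears at all.
            "presence_rate": counts[brand] / n if n else 0.0,
            # Brand's portion of all tracked brand mentions.
            "share_of_voice": counts[brand] / total_mentions if total_mentions else 0.0,
        }
        for brand in brands
    }


# Hypothetical answers collected for one prompt across several runs.
responses = [
    "For mid-size teams, Acme and Globex are both solid options.",
    "Globex is the most commonly recommended tool for this.",
    "Consider Acme if you need warehouse integration.",
    "There are many tools; Initech is one budget choice.",
]
metrics = visibility_metrics(responses, ["Acme", "Globex", "Initech"])
print(metrics["Acme"])
```

In this toy sample, "Acme" appears in two of four answers, so its presence rate is 0.5 while its share of voice is two of the five tracked mentions. The two numbers answer different questions, which is why it can help to report both.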

For modern marketing teams, this requires a strategic pivot from traditional SEO to Large Model Search Optimization (LSEO). The goal is no longer just to be found, but to ensure the brand is accurately represented within the model's "latent space": the vast, internal map of relationships the AI uses to generate answers.

1. The tech stack: choosing an AI Visibility platform

Because AI visibility is fundamentally about data integrity across vast ecosystems, it cannot be managed with isolated marketing tools. Selecting the right platform requires a rigorous technical evaluation of its ability to move beyond vanity metrics and into source influence.

A robust AI brand visibility platform must integrate seamlessly with existing enterprise analytics environments. Meikai is built natively on Google BigQuery, allowing high-speed processing of large-scale LLM citation data and direct integration with Vertex AI. This architecture lets marketing teams correlate top-of-funnel AI citations directly with bottom-line business outcomes inside their existing data warehouse, whether they use BigQuery natively or connect through an external stack such as Snowflake.

The demo checklist: hard questions to ask

When evaluating tools, move past the dashboard visuals and interrogate the backend methodology:

- Prompt volume & scale: Does the platform track 10 "keywords" or hundreds of conversational prompts?

- Cadence & LLM support: Does the platform run every day across all major models, including ChatGPT, Gemini, Copilot, Grok, Perplexity, and Ernie?

- Citation Level Tracking: Does the platform offer citation level tracking that identifies the exact domain or page the AI used as a source?

- Dual Agent Capability: Does it provide specialized agents for both OnSite (optimizing your domain’s "LLM readiness") and OffSite (engineering authority across 500+ external sources)?

- Model Update Frequency: How quickly does the platform ingest changes from new LLM versions (e.g., GPT-5 vs. GPT-4o)?

- Real Data vs. Synthetic Baselines: Does the platform provide real-time citation data from live LLM responses, does it rely on static training sets and synthetic models, or does it combine both?

- Sentiment attribution methodology: How does the tool distinguish between a neutral mention and a positive endorsement in complex, multi-paragraph AI responses?

- BI stack integration: Does it offer native connectors or robust APIs for your data warehouse to ensure seamless data flow?

2. The audit: baselining your "Probabilistic Presence"

Before you can optimize, you must understand your current standing in the latent space. A comprehensive AI visibility audit is distinct from a technical SEO audit.

It begins with developing a library of "brand-defining prompts": the critical, bottom-of-funnel questions your customers are asking AI assistants. The audit must then map the competitive landscape to identify not just whether you are mentioned, but where competitors are gaining citation share in specific topic clusters.

The Onboarding Protocol

Effective onboarding of an AI visibility strategy follows a strict technical checklist:

- Establishing baseline metrics: Measuring current share of voice across multiple distinct assistants (e.g., comparing ChatGPT's perception vs. Gemini's).

- Mapping regional adoption: Understanding that LLM penetration varies globally, requiring region-specific baselines.

- Setting discrepancy alerts: Configuring automated alerts for when a model update suddenly changes brand sentiment or introduces factual errors (hallucinations).
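The checklist above can be sketched as a simple comparison between a stored baseline and the latest measurement for each assistant. Everything here is an assumption made for illustration: the `Snapshot` fields, the sentiment scale, the thresholds, and the sample numbers are hypothetical, and a real platform would define its own.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    share_of_voice: float  # fraction of tracked mentions, 0.0 to 1.0
    sentiment: float       # assumed scale: -1.0 (negative) to 1.0 (positive)
    factual_errors: int    # responses contradicting the brand's fact sheet


def discrepancy_alerts(baseline, current, sov_drop=0.10, sentiment_drop=0.20):
    """Compare current snapshots to the baseline and list what changed for the worse."""
    alerts = []
    for assistant, now in current.items():
        before = baseline.get(assistant)
        if before is None:
            alerts.append(f"{assistant}: no baseline recorded")
            continue
        if before.share_of_voice - now.share_of_voice >= sov_drop:
            alerts.append(f"{assistant}: share of voice dropped")
        if before.sentiment - now.sentiment >= sentiment_drop:
            alerts.append(f"{assistant}: sentiment dropped")
        if now.factual_errors > before.factual_errors:
            alerts.append(f"{assistant}: new factual errors detected")
    return alerts


# Hypothetical baseline and post-update measurements for two assistants.
baseline = {
    "ChatGPT": Snapshot(0.40, 0.6, 0),
    "Gemini": Snapshot(0.30, 0.5, 1),
}
current = {
    "ChatGPT": Snapshot(0.38, 0.1, 2),
    "Gemini": Snapshot(0.10, 0.5, 1),
}
alerts = discrepancy_alerts(baseline, current)
for line in alerts:
    print(line)
```

Keeping one baseline per assistant (and, in practice, per region) matters because a model update can shift sentiment on one assistant while leaving the others unchanged.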

3. The CMO roadmap: Turning insights into action

Data without direction is just noise. To turn AI visibility insights into a concrete marketing roadmap, CMOs must look beyond simple "mention counts" and focus on strategic application.

This starts by tracking the "citation to conversion" ratio and assessing the risk of brand hallucinations. If the AI cites you frequently but inaccurately, it is a liability, not an asset.
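The article does not give a formula for the "citation to conversion" ratio, so the sketch below assumes one plausible reading: conversions attributed to AI-referred traffic divided by the number of citations observed in the same period. The inaccuracy rate, the function names, and the sample figures are likewise illustrative assumptions.

```python
def citation_to_conversion_ratio(citations, attributed_conversions):
    """Conversions per citation; None when there are no citations to divide by."""
    if citations <= 0:
        return None
    return attributed_conversions / citations


def inaccuracy_rate(cited_responses, inaccurate_responses):
    """Share of citing responses that state something false about the brand."""
    if cited_responses <= 0:
        return None
    return inaccurate_responses / cited_responses


# Hypothetical monthly figures.
ratio = citation_to_conversion_ratio(citations=400, attributed_conversions=10)
risk = inaccuracy_rate(cited_responses=400, inaccurate_responses=60)
print(f"citation-to-conversion: {ratio:.3f}, inaccuracy rate: {risk:.2f}")
```

Reading the two numbers together reflects the point above: a high citation count paired with a high inaccuracy rate signals risk rather than progress.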

Strategic Regional Rollouts

Do not attempt to boil the ocean. Prioritize rollouts in high-adoption regions like North America and Western Europe first. These markets provide the necessary volume of AI search usage to establish a reliable performance baseline before scaling tactics globally.

Targeting "Information Voids"

The most actionable element of an LSEO roadmap is identifying "information voids." These are specific technical or benefit-oriented topics where the AI currently lacks enough authoritative data to accurately represent your brand against a competitor.
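One rough way to surface candidate voids is to compare citation counts per topic cluster and flag the topics where your brand's share falls below a chosen floor. This is only a proxy: low citation share suggests, but does not prove, that the model lacks authoritative data. The topic names, brands, counts, the `min_share` threshold, and the function name are all hypothetical.

```python
def find_information_voids(citations_by_topic, brand, min_share=0.2):
    """Return (topic, brand_share, leading_brand) for topics where the brand is under-cited."""
    voids = []
    for topic, counts in citations_by_topic.items():
        total = sum(counts.values())
        if total == 0:
            continue  # No citations at all: nothing to compare against.
        share = counts.get(brand, 0) / total
        if share < min_share:
            leader = max(counts, key=counts.get)
            voids.append((topic, round(share, 2), leader))
    # Lowest share first, so the widest gaps surface at the top.
    return sorted(voids, key=lambda v: v[1])


# Hypothetical citation counts per topic cluster.
citations_by_topic = {
    "warehouse integration": {"Acme": 12, "Globex": 8},
    "pricing transparency": {"Acme": 1, "Globex": 9},
    "data residency": {"Globex": 5, "Initech": 5},
}
voids = find_information_voids(citations_by_topic, "Acme")
for topic, share, leader in voids:
    print(f"{topic}: share={share}, leader={leader}")
```

Here "Acme" holds its own on warehouse integration but is nearly or entirely absent from the other two clusters, which makes those the first candidates for new authoritative resources.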

Once identified, the strategy is clear: fill the void. This is achieved not by writing more blog posts, but by deploying high-density, authoritative resources designed for ingestion, which we refer to technically as engineering source influence.

(For a deep dive on the mechanics of engineering Source Influence and the MACO loops used to achieve it, read our technical breakdown here: Brand Source Influence.)

Conclusion: The Imperative of Governance

The shift to AI-driven search is not merely a new channel; it is a restructuring of how information is accessed. Brands that treat AI visibility as another SEO task will find themselves erased from the customer journey.

A strategic approach to AI visibility is fundamentally about brand governance. It is the proactive effort to ensure that the models influencing your customers’ decisions have the accurate, authoritative data they need to choose you.

The ultimate goal of AI visibility is Answer Dominance: the state where your brand is synthesized as the definitive solution across the latent space. Achieving this requires a combination of citation triggers and semantic density. See the research on achieving Answer Dominance.

External Research References

- The GEO Benchmark: Our optimization strategies build on the foundational research of Aggarwal et al. (2024), "GEO: Generative Engine Optimization." The study found that specific modifications, such as adding authoritative citations and statistics, can increase a source's visibility in generative engine responses by up to 40%.

- Industry Convergence: While this landmark paper (presented at KDD 2024) defines the visibility framework, Meikai’s proprietary MACO loops operationalize these findings to ensure your brand doesn't just appear, but dominates the synthesis.

- Further Reading: You can access the original peer-reviewed paper here: arXiv:2311.09735.

FAQ: Strategy & Execution

What is the vital difference between traditional online brand tracking and AI visibility?

Traditional tracking is reactive, monitoring what humans have written on the open web or social media. AI visibility is proactive governance, measuring the "probabilistic" likelihood of a generative model synthesizing your brand as a solution in future conversations.

How can marketing teams measure brand visibility across multiple AI assistants?

You cannot rely on manual spot checking. Teams need centralized platforms that automate the injection of standardized prompt libraries across all major LLMs simultaneously, normalizing the output data to provide a single view of cross model performance.
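As a rough illustration of what "standardized prompt libraries" and "normalized output" could mean in practice, the sketch below runs the same prompts against several assistants and flattens the results into one record format. The assistants are stand-in functions rather than real API clients, and every name here (`run_prompt_library`, `presence_by_assistant`, the brands, the prompts) is a hypothetical chosen for the example.

```python
def run_prompt_library(prompts, assistants, brand):
    """Send every prompt to every assistant and return flat, comparable records."""
    records = []
    for name, ask in assistants.items():
        for prompt in prompts:
            answer = ask(prompt)
            records.append({
                "assistant": name,
                "prompt": prompt,
                # Simple substring check; real tools would use richer detection.
                "mentioned": brand.lower() in answer.lower(),
            })
    return records


def presence_by_assistant(records):
    """Fraction of prompts per assistant whose answer mentioned the brand."""
    totals, hits = {}, {}
    for r in records:
        totals[r["assistant"]] = totals.get(r["assistant"], 0) + 1
        hits[r["assistant"]] = hits.get(r["assistant"], 0) + int(r["mentioned"])
    return {name: hits[name] / totals[name] for name in totals}


# Stand-in assistants: plain functions instead of real model API calls.
def assistant_a(prompt):
    return "Acme is a good fit."


def assistant_b(prompt):
    return "Try Globex." if "budget" in prompt else "Acme or Globex."


prompts = ["best tool for budget teams", "best tool for enterprise"]
records = run_prompt_library(
    prompts, {"assistant_a": assistant_a, "assistant_b": assistant_b}, brand="Acme"
)
summary = presence_by_assistant(records)
print(summary)
```

Because every assistant receives identical prompts and every answer lands in the same record shape, the per-assistant rates can be compared directly or loaded into a data warehouse table.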

What questions should CMOs ask about their visibility in AI search?

Ask: "Are we winning the synthesis, or just being mentioned?" and "What are the primary 'information voids' causing the AI to recommend our competitor over us for specific use cases?"